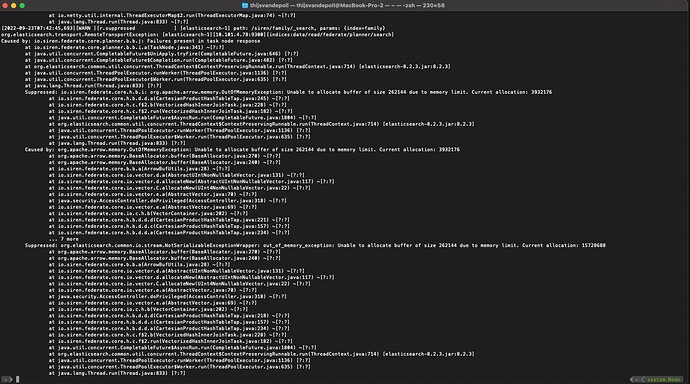

We are facing an out-of-memory error when running a join query with Siren in our cluster. The error is shown in the attached image.

What values are set for the following node-level settings?

- `siren.memory.root.limit`
- `siren.memory.job.limit`
- `siren.memory.task.limit`

If you can share it, what query are you executing? How many documents are in the “family” index, and in any other index involved in the query?

How can we get the default values of these memory limits? We haven’t set them yet.

We are joining two indexes. The primary index, “family”, has around 80 million records, but the join result set is under 100k. We are generally running aggregation queries.

I can see that these limits can be set based on the off-heap memory, and that they should generally be half of the maximum off-heap memory. But I’m curious how to check what the current limit is.
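The rule of thumb mentioned above (a limit of about half the maximum off-heap memory) is simple arithmetic. The sketch below assumes the maximum off-heap size is written as an Elasticsearch-style byte-size string such as `4gb`. The helper names `parse_byte_size` and `half_of_off_heap` are hypothetical and not part of Siren or Elasticsearch, and the one-half ratio is the rule of thumb quoted in this post, not a documented formula.

```python
import re

# Binary multipliers for Elasticsearch-style byte-size units (1kb = 1024 bytes).
_UNITS = {
    "b": 1,
    "kb": 1024,
    "mb": 1024 ** 2,
    "gb": 1024 ** 3,
    "tb": 1024 ** 4,
}


def parse_byte_size(value: str) -> int:
    """Parse a size string such as '4gb' or '512mb' into bytes (hypothetical helper).

    Only whole-number sizes with an explicit unit are handled.
    """
    match = re.fullmatch(r"\s*(\d+)\s*([a-zA-Z]+)\s*", value)
    if match is None:
        raise ValueError(f"unrecognised size: {value!r}")
    number, unit = match.groups()
    unit = unit.lower()
    if unit not in _UNITS:
        raise ValueError(f"unknown unit: {unit!r}")
    return int(number) * _UNITS[unit]


def half_of_off_heap(max_off_heap: str) -> int:
    """Return half the maximum off-heap size in bytes (rule of thumb, not a documented formula)."""
    return parse_byte_size(max_off_heap) // 2


# Example: with 4gb of off-heap memory, the rule of thumb suggests 2gb.
print(half_of_off_heap("4gb"))  # 2147483648
```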

I am calling the following API:

```
GET _siren/nodes/stats/memory
```

but it returns the following output:

```json
"memory" : {
  "allocated_direct_memory_in_bytes" : 0,
  "allocated_root_memory_in_bytes" : 0,
  "root_allocator_dump_reservation_in_bytes" : 0,
  "root_allocator_dump_actual_in_bytes" : 0,
  "root_allocator_dump_peak_in_bytes" : 1074724864,
  "root_allocator_dump_limit_in_bytes" : 1073741824
}
```
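To read these numbers more easily, the sketch below converts the byte counts in the `memory` object above into GiB and compares the recorded peak to the limit. It assumes you have already extracted that `memory` object from the API response as a Python dict (the full response may nest it under per-node keys); the function name `summarize_root_allocator` is hypothetical.

```python
GIB = 1024 ** 3


def summarize_root_allocator(memory: dict) -> dict:
    """Summarise the root allocator figures from a Siren `memory` stats object."""
    limit = memory["root_allocator_dump_limit_in_bytes"]
    peak = memory["root_allocator_dump_peak_in_bytes"]
    return {
        "limit_gib": limit / GIB,
        "peak_gib": peak / GIB,
        # True when the peak allocation reached or exceeded the configured limit.
        "peak_reached_limit": peak >= limit,
    }


# Values copied from the output above.
memory = {
    "allocated_direct_memory_in_bytes": 0,
    "allocated_root_memory_in_bytes": 0,
    "root_allocator_dump_reservation_in_bytes": 0,
    "root_allocator_dump_actual_in_bytes": 0,
    "root_allocator_dump_peak_in_bytes": 1074724864,
    "root_allocator_dump_limit_in_bytes": 1073741824,
}

summary = summarize_root_allocator(memory)
print(summary["limit_gib"])           # 1.0
print(summary["peak_reached_limit"])  # True
```

Here the limit is exactly 1 GiB (1073741824 bytes) and the recorded peak is slightly above it, which is consistent with the join running up against the configured limit.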

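By default, the off-heap memory allocated is 1 GB, which matches the `root_allocator_dump_limit_in_bytes` value of 1073741824 bytes (exactly 1 GiB) in your output. You should set a value that better suits your cluster; see “Configuring the off-heap memory” in the Siren documentation.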

You can get the current settings, including their default values, by executing `GET _cluster/settings?flat_settings=true&include_defaults=true`.
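That request returns every setting, so the `siren.memory.*` values can be hard to spot. The sketch below filters an already-decoded response dict for keys with that prefix, letting explicitly set values override defaults and transient values override persistent ones. The function name `siren_memory_settings` and the placeholder values in the sample response are hypothetical, for illustration only.

```python
def siren_memory_settings(response: dict, prefix: str = "siren.memory.") -> dict:
    """Collect settings whose key starts with `prefix` from a flat_settings response.

    Sections are merged in order so that explicitly set values override
    defaults, and transient values override persistent ones.
    """
    merged = {}
    for section in ("defaults", "persistent", "transient"):
        for key, value in response.get(section, {}).items():
            if key.startswith(prefix):
                merged[key] = value
    return merged


# Hypothetical, abbreviated response; real keys and values will differ.
response = {
    "persistent": {},
    "transient": {},
    "defaults": {
        "siren.memory.root.limit": "<root limit>",
        "siren.memory.job.limit": "<job limit>",
        "cluster.name": "my-cluster",
    },
}

print(siren_memory_settings(response))
```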

Thanks, we increased the limit and it worked.